The Implications of Artificial Intelligence in Contemporary Military Planning

November 14, 2025 - Written by Seb Fryer

Introduction

The incorporation of AI into military doctrine marks one of the most significant transformations in warfare since the introduction of nuclear weapons and cyber capabilities. AI-driven technologies now underpin critical components of national defence systems, from real-time threat detection to autonomous targeting and logistics optimisation. The central research question guiding this report is: To what extent does artificial intelligence strengthen or destabilise contemporary military planning from a geopolitical perspective?

AI’s capacity to process vast quantities of data, simulate operational environments, and support decision-making introduces both efficiencies and vulnerabilities. While it promises faster, more informed strategic decisions, it also risks eroding human oversight, embedding algorithmic bias, and enabling lethal autonomy. This duality lies at the heart of modern strategic competition and defines the future of warfare. Globally, over 60 national militaries are now investing in AI-enabled systems for intelligence, surveillance, and reconnaissance (ISR) purposes.

Contextual Analysis

Technological innovation has historically served as a determinant of strategic power. From the industrial revolution to the nuclear age, each leap in technology has redefined the conduct and calculus of war. Today, AI represents the next inflection point.

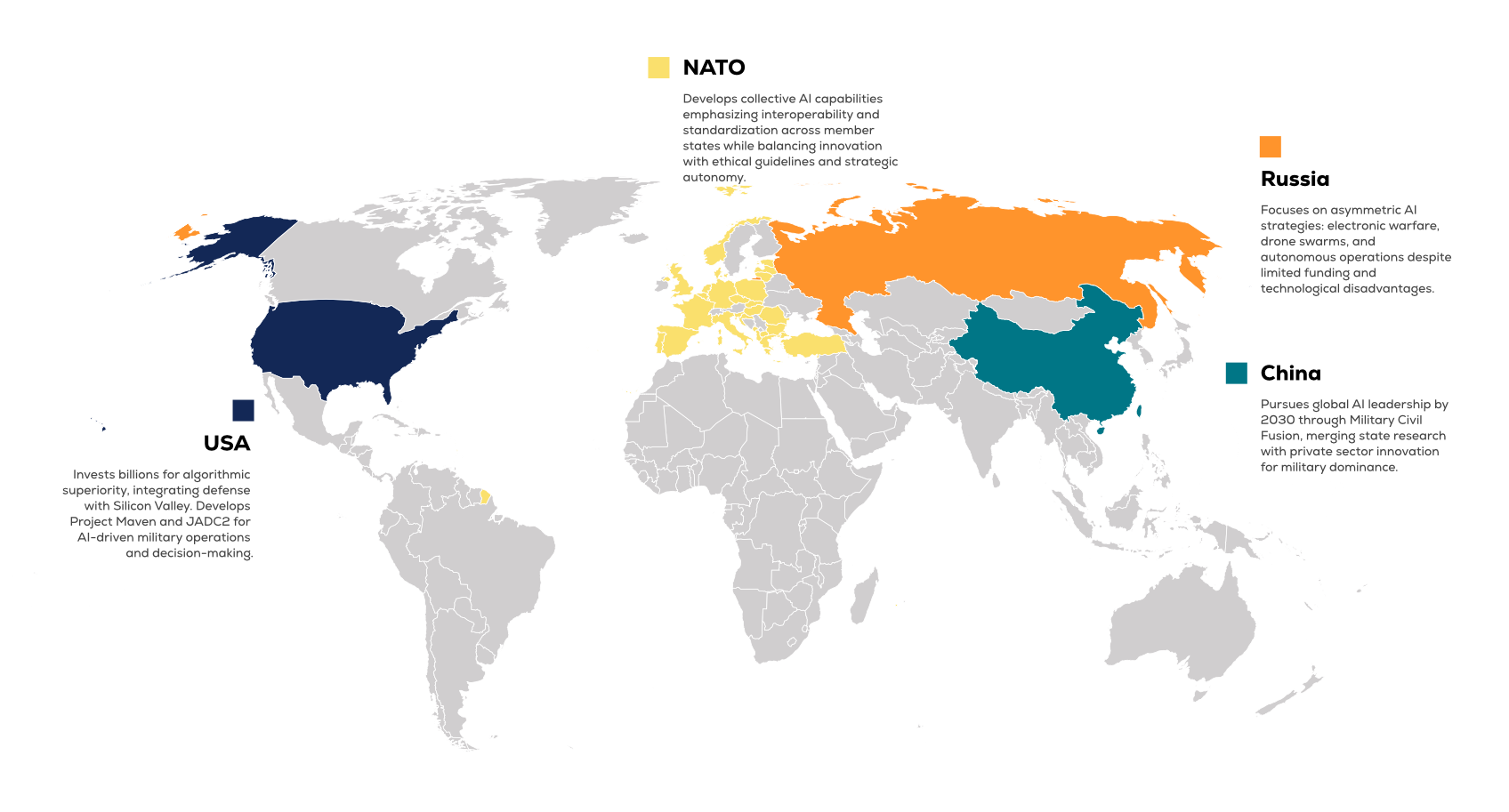

The United States, China, and Russia are investing billions in AI research to secure “algorithmic superiority”: the ability to outthink adversaries through machine learning, automation, and predictive analytics.

In fiscal year 2024, the U.S. Department of Defense allocated approximately $1.8 billion to AI-related defence projects, reflecting Washington’s determination to embed AI across all command and control functions. China’s Military-Civil Fusion strategy, promoted under Xi Jinping, embeds AI research in both state and private enterprise, making companies such as Baidu and Huawei central to the People’s Liberation Army’s modernisation agenda. Russia, though trailing technologically, has focused its limited resources on integrating AI into autonomous drone warfare and electronic operations, as seen in the conflict with Ukraine.

Yet these developments have emerged within a regulatory vacuum. International law and treaties, including the Geneva Conventions and the Convention on Certain Conventional Weapons (CCW), have not evolved quickly enough to address the advent of algorithmic warfare. Lawmakers, for their part, lag well behind the current state of AI’s capabilities and advancements. The result is a “grey zone” of accountability, in which AI-enabled weapons can be deployed with very limited international or domestic oversight. This absence of governance mechanisms accelerates an AI arms race and intensifies the militarisation of emerging technologies.

Analysis

Decision-Making Processes

AI’s ability to process data in real time enhances command decision-making by enabling faster and more precise responses to emerging threats. Decision-support systems such as the US Department of Defense’s Project Maven exemplify this capability, using computer vision to interpret drone surveillance imagery and assist targeting decisions. However, the delegation of operational judgement to AI systems introduces existential risks. Human commanders may develop automation bias, the tendency to over-trust algorithmic outputs, thereby undermining moral and strategic oversight. Should an algorithm misinterpret data or be compromised by adversarial inputs, the resulting miscalculations could have catastrophic human consequences.

Moreover, the opacity of AI’s “black box” processes complicates accountability. When an autonomous system’s decision cannot be explained transparently, moral and legal responsibility for wartime actions becomes diffuse. This erodes the foundational principle of command responsibility enshrined in international humanitarian law.

Intelligence and Data Analysis

AI is revolutionising intelligence operations by integrating satellite imagery, electronic signals, and open-source data. Machine learning systems can quickly identify battlefield trends invisible to human analysts, predict troop movements, and detect cyber intrusions. Ukraine’s application of AI-enhanced satellite imagery, supported by Palantir and Maxar, demonstrates this transformation in real combat conditions. Yet, AI-driven intelligence also introduces vulnerabilities. Algorithms may amplify biases embedded in training data, misclassify targets, or fall victim to deliberate manipulation. In 2023, NATO’s Strategic Command reported a 42 per cent increase in cyber incidents targeting AI-enabled defence systems compared to 2021, underscoring the susceptibility of such technologies to adversarial interference. Dependence on private-sector providers such as Google, Microsoft, and Palantir for defence applications further complicates accountability. This outsourcing of strategic intelligence infrastructure raises critical questions regarding state sovereignty and the privatisation of warfare.

Autonomous Systems and Platforms

Autonomous systems constitute the most visible manifestation of AI in warfare. From unmanned aerial vehicles to robotic naval platforms, these technologies are transforming operational doctrines by extending range, endurance, and precision while reducing human exposure to combat risks. By 2025, the global market for autonomous military platforms is projected to reach £12.6 billion, with drones accounting for almost 70 per cent of total expenditure. Yet, the delegation of lethal decision-making to autonomous systems fundamentally challenges established norms within international humanitarian law. If a weapon independently identifies and engages a target, the question of accountability, whether legal, moral, or political, becomes deeply ambiguous. Furthermore, the prospect of autonomous escalation, in which AI systems interpret threats and retaliate without human oversight, raises fears of machine-driven conflict initiation. Loitering munitions such as Israel’s Harpy or Russia’s Lancet, already operating at the margins of human supervision, exemplify this grey zone between automation and autonomy.

Key Players and Stakeholders

The geopolitical contest over military AI is dominated by three major powers: the United States, China, and Russia. The United States retains a competitive edge through its integration of defence innovation with Silicon Valley. Initiatives such as the Algorithmic Warfare Cross-Functional Team (AWCFT) and the Joint All-Domain Command and Control (JADC2) programme aim to create seamless interoperability between air, land, sea, and cyber domains. China’s New Generation Artificial Intelligence Development Plan (2017) sets the objective of achieving global AI supremacy by 2030, linking military modernisation to civilian innovation. Russia, in contrast, pursues asymmetric strategies, using AI to enhance electronic warfare, drone swarms, and information operations.

AI Defence Spending by Major Powers (2024)

Other stakeholders include NATO and the European Union, both of which are developing ethical frameworks for AI deployment through the Responsible AI Strategy for Defence (2021). The United Nations and International Committee of the Red Cross (ICRC) advocate for legal constraints on fully autonomous weapons. Meanwhile, private technology corporations remain pivotal actors, supplying dual-use technologies that both empower and constrain state control.

Military, Economic, and Social Dimensions

Militarily, AI has redefined logistics and operational tempo. Predictive maintenance algorithms reduce equipment downtime and enhance combat readiness, while autonomous resupply systems sustain forces in contested environments. However, the increasing reliance on software-controlled command systems introduces new vectors for cyberattack and operational paralysis.

Economically, AI-driven defence expenditure has become a defining feature of global rearmament. The global military AI market is projected to exceed £20 billion annually by 2030, intensifying competition and deepening divides between technologically advanced states and those left behind. The concentration of AI expertise within a handful of private corporations creates dependencies that weaken state autonomy and blur the line between national and corporate security.

As shown in the chart above, the largest share of military AI spending is allocated to autonomous systems (35%), followed by intelligence, surveillance, and reconnaissance (ISR) (25%) and cyber defence (20%).

Socially, the militarisation of AI raises profound questions about legitimacy and human agency. Civil society movements such as Stop Killer Robots highlight public unease over algorithmic targeting and the automation of lethal force. The convergence of surveillance technologies, predictive policing, and military AI systems further threatens to normalise a permanent state of algorithmic control, eroding civil liberties in peacetime societies.

Opportunities and Risks

AI offers tangible strategic benefits. Enhanced situational awareness, faster decision cycles, and reduced human exposure to risk promise to improve both human efficiency and survivability in complex operations. AI technologies also contribute to humanitarian applications, such as disaster response and post-conflict reconstruction. Nevertheless, the risks are profound. Algorithmic bias, data manipulation, and overconfidence in machine outputs could produce severe misjudgements. The proliferation of autonomous systems risks lowering the threshold for conflict initiation, as machine-speed decision cycles compress the time available for human diplomacy. If unchecked, AI militarisation could create a new form of digital deterrence, a balance of power based not on nuclear stockpiles but on algorithmic superiority. The opacity and unpredictability of such systems make this an inherently unstable foundation for global security.

Policy Recommendations

Governments and international institutions should pursue a coherent framework for AI governance in defence contexts. First, global arms control mechanisms must be extended to encompass lethal autonomous weapons, with verifiable compliance provisions under the CCW. Second, human-in-the-loop oversight should be legally mandated for all lethal decision-making, ensuring moral accountability. Third, states must strengthen cyber resilience by investing in quantum-secure communications to safeguard AI networks. Further, the explainability of military AI should be improved through transparent, auditable algorithmic design. Ethical research standards must be institutionalised through cross-national boards uniting militaries, academia, and civil society. Finally, international collaboration, via NATO, ASEAN, and the United Nations, should promote common testing, certification, and responsible use frameworks.

Conclusion

Artificial intelligence represents both a revolution in military capability and a potential disruption to global stability. Its integration into defence systems promises unmatched precision, speed, and strategic insight, but also risks eroding human control, ethical restraint, and international trust. The geopolitical implications of military AI hinge not merely on technological capability but on governance: how states choose to deploy, regulate, and cooperate in its use. Without shared norms and ethical oversight, AI could usher in a new era of automated warfare. The challenge for policymakers is to reconcile innovation with enduring principles of humanity, accountability, and peace. If the twentieth century was defined by nuclear deterrence, the twenty-first may well be shaped by algorithmic deterrence and by the question of whether humanity remains in command of its machines.

Written by Seb Fryer

Security & Terrorism Research Desk